The quest for a highly-available Homelab

As I mentioned in my last post, partly for practical reasons and partly for fun, I've been trying to make some "critical" applications in my homelab highly available. Making apps highly available within a cluster is actually pretty simple: if they support multiple replicas, just scale up, and if not... you're sort of screwed. Making apps highly available across multiple clusters, however, isn't as easy.

The primary application that I want to make highly available is Vaultwarden. My wife and I use it as our primary password storage solution, and losing access to it can cause pretty dire problems. I am especially fearful of being somewhere I can't easily access my nodes (e.g. on vacation) and experiencing a cluster-wide outage. Thus, my quest began to remove the home from homelab.

Take a look at my previous post (linked above) for details on how I set up data replication between clusters. This post will be focused more on the networking side of things.

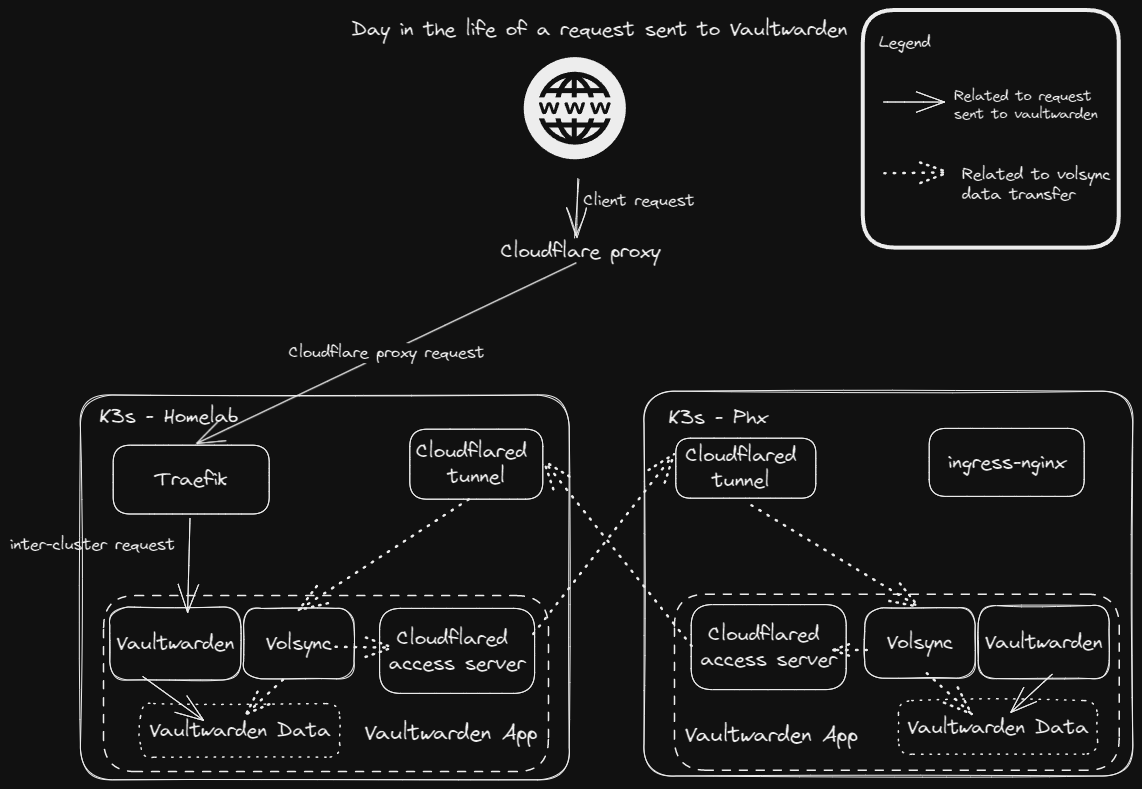

With replication set up, my Vaultwarden setup looked something like this:

Data was being synchronized between the two clusters, but there was no automatic failover for when my homelab cluster experienced an outage. I would have to manually change DNS records to point to my OCI Phoenix cluster if I wanted to bring my backup online. What I really needed was a global server load balancer.

From my research, I found I had basically two options: balance at the DNS level, or stand up a dedicated load balancer machine outside of both clusters. Unfortunately, the best solutions, like anycast load balancing, were not compatible with my homelab.

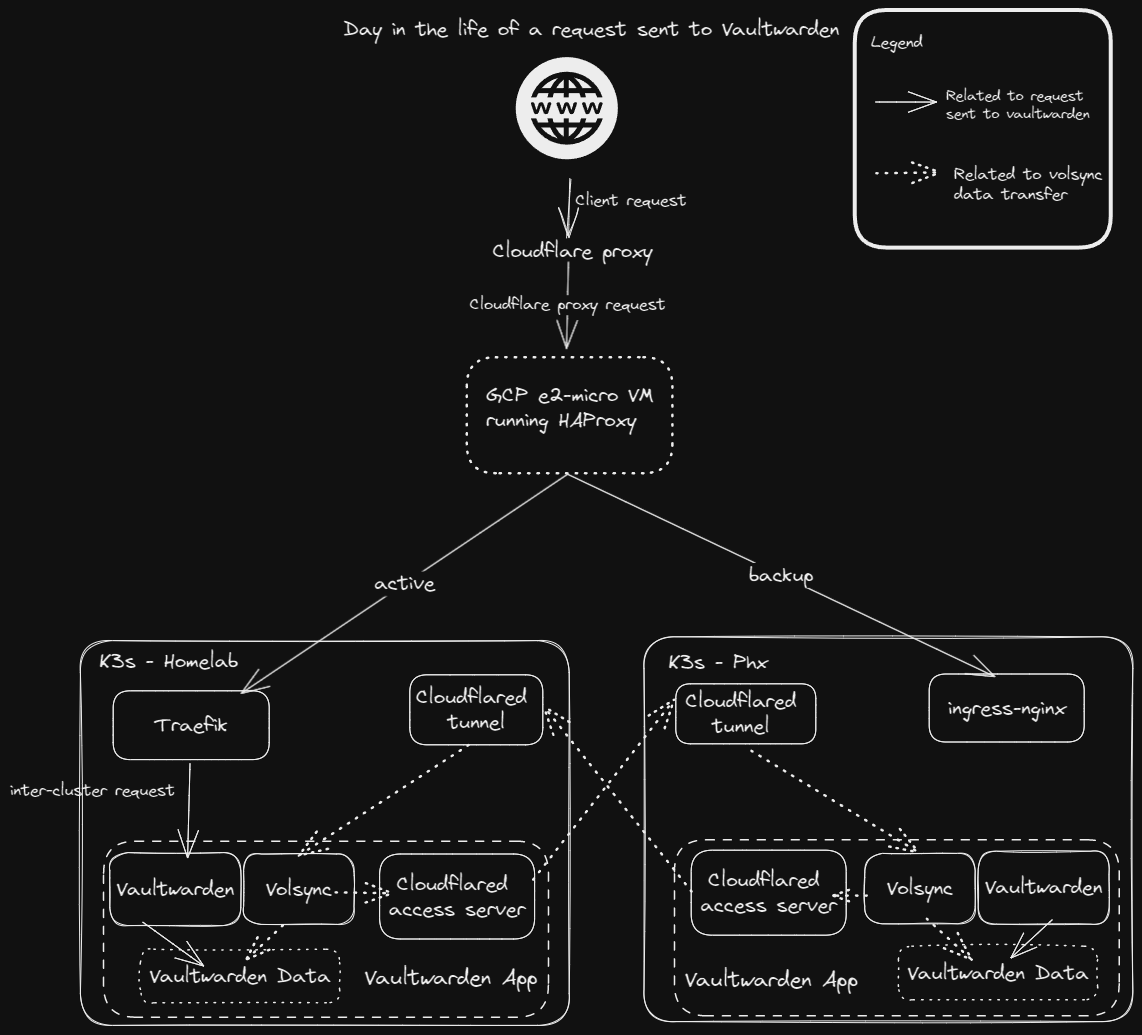

In the end, I decided to stand up a physical loadbalancer simply because it was free. I am using GCP with a e2-micro VM running HAProxy, which falls within their free-tier usage. Compared with DNS load balancing, this adds another hop to a network request (not great) but doesn't suffer downtime DNS loadbalancing solutions do while propagating their new records. My architecture with my HAProxy load balancer looks something like this:

My HAProxy configuration was nothing special, except for the health check for my homelab cluster. I wanted to expose the Kubernetes `/healthz` endpoint without actually exposing the whole API to the world, so I wrote a simple backend to perform the request for me (and, as a bonus, I could use it to simulate downtime). Then, I exposed this service to the internet so HAProxy could use it for its health checks. My final HAProxy configuration looks something like this:

    # Pass traffic through as raw TCP (no TLS termination here)
    frontend localhost
        bind *:80
        bind *:443
        option tcplog
        mode tcp
        default_backend nodes

    # Health endpoint for HAProxy itself
    listen health_check_http_url
        bind :8888
        mode http
        monitor-uri /healthz
        option dontlognull

    backend nodes
        mode tcp
        balance roundrobin
        option ssl-hello-chk
        option httpchk GET /healthz
        # Primary: the health check is sent to port 1024 rather than 443
        server web01 1.2.3.4:443 weight 1 check port 1024 inter 5s rise 3 fall 2
        # Backup: used only when the primary is marked down
        server web02 5.6.7.8:443 backup weight 1 check check-ssl verify none inter 5s rise 3 fall 2

And with that, it's pretty much done! In reality, this setup is better suited to services like wikis than to databases, where the risk of data corruption during synchronization is very high. I attempt to mitigate this by not actually running a Vaultwarden instance in my OCI Phoenix cluster. Then, if there is an outage, I can scale it up quickly (potentially automated in the future, who knows?).
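For illustration, here is a minimal Python sketch of what a health-check backend like the one described above might look like. It is not the actual service from this setup. The listening port (1024, chosen to match `check port 1024` in the config), the in-cluster API URL, the service-account file paths, and the `/tmp/simulate-down` flag file are all assumptions.

```python
"""Sketch of a health-check proxy: exposes /healthz without exposing the Kubernetes API."""
import os
import ssl
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumptions for this sketch (none of these come from the original setup):
API_HEALTHZ = "https://kubernetes.default.svc/healthz"     # in-cluster API address
SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"   # service-account mount
SIMULATE_FLAG = "/tmp/simulate-down"                       # hypothetical outage switch
LISTEN_PORT = 1024                                         # matches "check port 1024"


def cluster_is_healthy(url=API_HEALTHZ, timeout=2.0):
    """Return True only if the API server answers the health URL with HTTP 200."""
    try:
        ctx = ssl.create_default_context(cafile=f"{SA_DIR}/ca.crt")
        with open(f"{SA_DIR}/token") as f:
            token = f.read().strip()
        req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
        with urllib.request.urlopen(req, timeout=timeout, context=ctx) as resp:
            return resp.status == 200
    except Exception:
        # Any failure (missing files, timeout, TLS error, non-2xx) counts as unhealthy.
        return False


def status_for(path, probe, simulate_down=False):
    """Map a request path to the HTTP status code the load balancer should see."""
    if path != "/healthz":
        return 404
    if simulate_down or not probe():
        return 503
    return 200


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # The flag file is checked on every request, so it can be toggled at runtime.
        simulate = os.path.exists(SIMULATE_FLAG)
        code = status_for(self.path, cluster_is_healthy, simulate)
        body = b"ok\n" if code == 200 else b"fail\n"
        self.send_response(code)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    HTTPServer(("0.0.0.0", LISTEN_PORT), HealthHandler).serve_forever()
```

With a service like this, creating the flag file (`touch /tmp/simulate-down`) makes the endpoint return 503. HAProxy's `httpchk` treats only 2xx and 3xx responses as healthy, so after two failed checks (`fall 2`, five seconds apart) it should mark the primary down and send traffic to the backup server.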

Thanks for reading!
