Global Services Load Balancer... for a Homelab?

At first glance, a global services load balancer might seem like the last thing a homelab needs. After all, isn't the whole point of a homelab that it lives in one place: your home? However, as you delve deeper into self-hosting, you might find yourself deploying more and more workloads that are critical to your everyday life. Although it's not fun to have an SLA with yourself (or your family), you might find one necessary for applications such as a password manager.

My password manager, Vaultwarden, was the catalyst for my investigation into "data-center" level redundancy for my homelab. Suffering an outage while I'm away from home is simply not acceptable for an application so crucial to my family's daily life and mine.

Strategies

When it comes to global service load balancing, there are generally two accepted strategies:

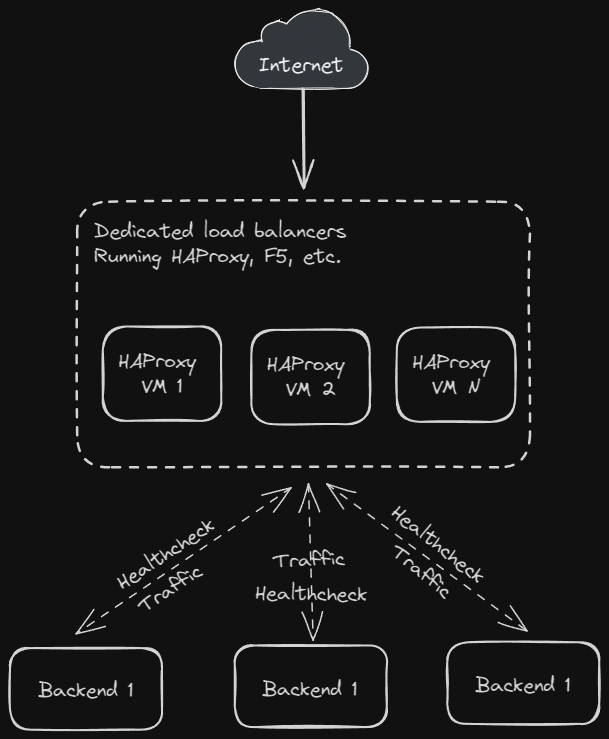

- Routing all traffic through a single load-balancing machine (or a cluster of them) that determines the health of its backends through its own health checks. This is the approach I first took, but it comes with several drawbacks, the greatest being that it necessitates another VM in another datacenter (or, more ideally, an entire cluster) dedicated to just load balancing. Considering that my goal is to spend as little money as possible, that left me with my free Google Cloud Platform VM, which is tiny and only offers 100MB of bandwidth per month.

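- Cleverly using DNS to advertise the endpoints for a workload: the authoritative nameserver only hands out the addresses of backends that are currently healthy, and clients then connect to those backends directly. This takes a little more work to set up, but requires no dedicated hardware. For Kubernetes specifically, K8gb is the most mature product I could find, and even it has not released a 1.0 version yet.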
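To make the DNS-based strategy more concrete, here is a minimal Go sketch of the decision a GSLB-aware nameserver makes on each query: it tracks the health of each cluster's public endpoint and answers only with the healthy IPs, rotating their order so clients spread across clusters. The Endpoint and Responder types, the cluster names, and the documentation-range IPs are hypothetical illustrations, not K8gb's code.

```go
package main

import (
	"fmt"
	"sync"
)

// Endpoint is a hypothetical record of one cluster's public IP and its
// last known health, as reported by some external health check.
type Endpoint struct {
	Cluster string
	IP      string
	Healthy bool
}

// Responder sketches the per-query decision of a GSLB nameserver.
type Responder struct {
	mu        sync.Mutex
	endpoints []Endpoint
	next      int // rotation offset for round-robin ordering
}

// SetHealth records the result of a health check for one cluster.
func (r *Responder) SetHealth(cluster string, healthy bool) {
	r.mu.Lock()
	defer r.mu.Unlock()
	for i := range r.endpoints {
		if r.endpoints[i].Cluster == cluster {
			r.endpoints[i].Healthy = healthy
		}
	}
}

// Answer returns only the healthy IPs, rotated by one position per call so
// that clients which use the first record are spread across clusters.
func (r *Responder) Answer() []string {
	r.mu.Lock()
	defer r.mu.Unlock()

	var healthy []string
	for _, e := range r.endpoints {
		if e.Healthy {
			healthy = append(healthy, e.IP)
		}
	}
	if len(healthy) == 0 {
		return nil // nothing healthy: empty answer
	}
	start := r.next % len(healthy)
	r.next++

	out := make([]string, 0, len(healthy))
	out = append(out, healthy[start:]...)
	out = append(out, healthy[:start]...)
	return out
}

func main() {
	r := &Responder{endpoints: []Endpoint{
		{Cluster: "phx", IP: "198.51.100.10", Healthy: true},
		{Cluster: "sj", IP: "203.0.113.20", Healthy: true},
	}}
	fmt.Println(r.Answer()) // [198.51.100.10 203.0.113.20]
	fmt.Println(r.Answer()) // [203.0.113.20 198.51.100.10]
	r.SetHealth("sj", false)
	fmt.Println(r.Answer()) // [198.51.100.10]
}
```

The point of the model is that a client only ever receives addresses that were healthy when the answer was produced and then talks to that cluster directly, so no extra load-balancing VM sits in the data path.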

Setup

For my prototype, I'm going to use my 2 Oracle Cloud clusters. First, we'll need to install K8gb using Helm. You can see the full values for one of my clusters here.

Reverse Proxy Considerations

Since my clusters sit behind a single, free load balancer and I don't want to pay for new load balancers, I use a reverse proxy for ingress traffic. Unfortunately, as of version 0.11.5, K8gb doesn't support reverse proxies: it ends up advertising the internal IP of your reverse proxy endpoint rather than the actual external endpoint. This is because it discovers an application's endpoints from the Ingress's status.loadBalancer field. Fortunately, this is easy to hack around. I added some simple code to read the actual external IP from an annotation, if it exists:

```go
// externalIPAnnotation is the annotation this patch reads; it matches the
// k8gb.io/external-ip annotation set on the Gslb resource later in this post.
const externalIPAnnotation = "k8gb.io/external-ip"

// GslbIngressExposedIPs retrieves the list of IPs exposed by all GSLB ingresses
func (r *Gslb) GslbIngressExposedIPs(gslb *k8gbv1beta1.Gslb) ([]string, error) {
	// Patch: if the Gslb carries a non-empty external IP annotation, advertise
	// that IP (the reverse proxy's public address) instead of the Ingress status.
	annotations := gslb.GetAnnotations()
	if annotationValue, found := annotations[externalIPAnnotation]; found && annotationValue != "" {
		return []string{annotationValue}, nil
	}

	// Upstream behaviour: read addresses from the Ingress's status.loadBalancer.
	nn := types.NamespacedName{
		Name:      gslb.Name,
		Namespace: gslb.Namespace,
	}
	gslbIngress := &netv1.Ingress{}
	err := r.client.Get(context.TODO(), nn, gslbIngress)
	if err != nil {
		if errors.IsNotFound(err) {
			log.Info().
				Str("gslb", gslb.Name).
				Msg("Can't find gslb Ingress")
		}
		return nil, err
	}

	var gslbIngressIPs []string
	for _, ip := range gslbIngress.Status.LoadBalancer.Ingress {
		if len(ip.IP) > 0 {
			gslbIngressIPs = append(gslbIngressIPs, ip.IP)
		}
		// Load balancers that report a hostname instead of an IP are
		// resolved against the edge DNS servers.
		if len(ip.Hostname) > 0 {
			IPs, err := utils.Dig(ip.Hostname, r.edgeDNSServers...)
			if err != nil {
				log.Warn().Err(err).Msg("Dig error")
				return nil, err
			}
			gslbIngressIPs = append(gslbIngressIPs, IPs...)
		}
	}
	return gslbIngressIPs, nil
}
```

If you want to use the same image I created, you can pull it from my internal registry: harbor.neilfren.ch/k8gb/k8gb:0.0.5
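Because the patch only changes which addresses win, its precedence rule is easy to isolate and unit test outside the operator. The sketch below mirrors that rule with plain Go types: a non-empty k8gb.io/external-ip annotation overrides whatever the Ingress status reports. The lbIngress type and exposedIPs function are hypothetical stand-ins for the Kubernetes types and the patched method, and hostname-only entries are simply skipped here rather than resolved with Dig.

```go
package main

import "fmt"

const externalIPAnnotation = "k8gb.io/external-ip"

// lbIngress is a hypothetical stand-in for one entry of an Ingress's
// status.loadBalancer.ingress list.
type lbIngress struct {
	IP       string
	Hostname string
}

// exposedIPs mirrors the patched precedence: a non-empty annotation first,
// otherwise the IPs reported in the Ingress status. Hostname-only entries
// are ignored in this sketch (the real code resolves them via DNS).
func exposedIPs(annotations map[string]string, status []lbIngress) []string {
	if v, ok := annotations[externalIPAnnotation]; ok && v != "" {
		return []string{v}
	}
	var ips []string
	for _, s := range status {
		if s.IP != "" {
			ips = append(ips, s.IP)
		}
	}
	return ips
}

func main() {
	status := []lbIngress{{IP: "10.0.0.15"}} // internal IP of the reverse proxy
	fmt.Println(exposedIPs(nil, status))      // [10.0.0.15]
	fmt.Println(exposedIPs(map[string]string{externalIPAnnotation: "203.0.113.20"}, status)) // [203.0.113.20]
}
```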

Exposing K8gb CoreDNS

K8gb packages a modified CoreDNS which looks at your GSLB resources and advertises the backends that are currently healthy. For the Internet to actually see this modified CoreDNS, you'll need to expose it. This article won't detail how to do that, but it basically comes down to opening port 53 (UDP, plus TCP for larger responses) and directing that traffic to the K8gb CoreDNS service.
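Once port 53 is open, one way to confirm that the exposed K8gb CoreDNS answers from outside the cluster is to query it directly instead of going through your usual resolver. Here is a minimal Go sketch using the standard library's net.Resolver with a custom Dial function; the server IP 203.0.113.20 and the hostname me.example.com are placeholders for your cluster's external IP and a GSLB-managed host.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"time"
)

// dnsServerAddr appends the default DNS port if the address has none.
func dnsServerAddr(server string) string {
	if _, _, err := net.SplitHostPort(server); err == nil {
		return server
	}
	return net.JoinHostPort(server, "53")
}

// resolverFor returns a resolver that sends every query to the given server
// instead of the system's configured resolvers. The network ("udp" or "tcp")
// chosen by the resolver is preserved, so TCP fallback also hits the server.
func resolverFor(server string) *net.Resolver {
	addr := dnsServerAddr(server)
	return &net.Resolver{
		PreferGo: true, // use Go's built-in DNS client so the custom Dial is used
		Dial: func(ctx context.Context, network, _ string) (net.Conn, error) {
			var d net.Dialer
			return d.DialContext(ctx, network, addr)
		},
	}
}

func main() {
	ctx, cancel := context.WithTimeout(context.Background(), 3*time.Second)
	defer cancel()

	ips, err := resolverFor("203.0.113.20").LookupHost(ctx, "me.example.com")
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Println("advertised backends:", ips)
}
```

With a roundRobin GSLB and both clusters healthy, you would expect the lookup to return both external IPs.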

Instead of creating glue records in my DNS provider, I hardcoded them into my Kubernetes CoreDNS configuration. This is one less trip outside the cluster and marginally more secure:

```yaml
- name: hosts
  configBlock: |-
    ${SECRET_PHX_EXTERNAL_IP} gslb-ns-phx-neilfren-ch.${SECRET_DOMAIN}
    ${SECRET_SJ_EXTERNAL_IP} gslb-ns-sj-neilfren-ch.${SECRET_DOMAIN}
    fallthrough
```

With the K8gb operator deployed and our modifications set up, all that's left is to create our Gslb resource! Thankfully, it's pretty simple. For my prototype, I'm using my homepage since it's a simple, static application. I also added an environment variable that shows which cluster it's being served from.

```yaml
---
apiVersion: k8gb.absa.oss/v1beta1
kind: Gslb
metadata:
  name: homepage
  annotations:
    k8gb.io/external-ip: "${SECRET_SJ_EXTERNAL_IP}"
spec:
  ingress:
    ingressClassName: cilium
    rules:
      - host: &host me.${SECRET_DOMAIN}
        http:
          paths:
            - path: /
              pathType: Prefix
              backend:
                service:
                  name: homepage
                  port:
                    name: http
    tls:
      - hosts:
          - *host
  strategy:
    type: roundRobin
```

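You can read more about what strategy means in K8gb's docs. k8gb.io/external-ip is the annotation my patched image reads to advertise the application's actual external IP; on each cluster it should hold that cluster's own external IP (the manifest above is the San Jose one).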
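Roughly speaking, the strategy decides which healthy targets the K8gb CoreDNS hands out. Below is a simplified Go model of two strategies based on the descriptions in K8gb's docs: roundRobin (advertise every healthy cluster) and failover (advertise the primary geo tag's cluster while it is healthy, otherwise the rest). The Target type, the selectTargets function, and the "phx"/"sj" geo tags are illustrative assumptions, not K8gb's implementation.

```go
package main

import "fmt"

// Target is a hypothetical view of one cluster's advertised address.
type Target struct {
	GeoTag  string
	IP      string
	Healthy bool
}

// selectTargets returns the IPs a GSLB nameserver would advertise under a
// given strategy. Unknown strategies advertise nothing in this sketch.
func selectTargets(strategy, primaryGeoTag string, targets []Target) []string {
	var primary, others []string
	for _, t := range targets {
		if !t.Healthy {
			continue // unhealthy clusters are never advertised
		}
		if t.GeoTag == primaryGeoTag {
			primary = append(primary, t.IP)
		} else {
			others = append(others, t.IP)
		}
	}
	switch strategy {
	case "roundRobin":
		return append(primary, others...) // every healthy cluster
	case "failover":
		if len(primary) > 0 {
			return primary // primary region while it is healthy
		}
		return others // otherwise fail over to the remaining clusters
	default:
		return nil
	}
}

func main() {
	targets := []Target{
		{GeoTag: "phx", IP: "198.51.100.10", Healthy: true},
		{GeoTag: "sj", IP: "203.0.113.20", Healthy: true},
	}
	fmt.Println(selectTargets("roundRobin", "", targets)) // [198.51.100.10 203.0.113.20]
	fmt.Println(selectTargets("failover", "sj", targets)) // [203.0.113.20]
	targets[1].Healthy = false
	fmt.Println(selectTargets("failover", "sj", targets)) // [198.51.100.10]
}
```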

With the GSLB in place on both clusters, you should be able to see both your backends being advertised in the status of your GSLB, like so:

Finally, you just need to create the NS records on your DNS provider to delegate DNS to our exposed K8gb CoreDNS instance.
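After the NS records propagate, you can check that the delegation really points at the K8gb nameservers. A minimal Go sketch using net.LookupNS from the standard library; the zone gslb.example.com is a placeholder, and the "gslb-ns-" prefix check assumes your nameserver names follow the same pattern as the ones in the CoreDNS hosts block.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// delegatedToK8gb reports whether every NS host carries the given prefix,
// i.e. whether the zone is delegated only to the GSLB nameservers.
func delegatedToK8gb(nsHosts []string, prefix string) bool {
	if len(nsHosts) == 0 {
		return false
	}
	for _, h := range nsHosts {
		if !strings.HasPrefix(strings.ToLower(h), prefix) {
			return false
		}
	}
	return true
}

func main() {
	records, err := net.LookupNS("gslb.example.com") // placeholder zone
	if err != nil {
		fmt.Println("NS lookup failed:", err)
		return
	}
	var hosts []string
	for _, ns := range records {
		hosts = append(hosts, ns.Host)
	}
	fmt.Println(hosts, "delegated to k8gb:", delegatedToK8gb(hosts, "gslb-ns-"))
}
```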

With that, all the pieces should be in place! You can see this in action here. Note the environment at the bottom of the page: you should see either Phoenix or San Jose. Because resolvers cache the answer until the record's TTL expires, you may keep landing on the same cluster for a while; if you wait or clear your DNS cache and reload, you should eventually see the other cluster!

For a complete architecture overview, please see the K8gb docs. What I've implemented here generally follows the 2-cluster setup, except with Oracle Cloud instead of AWS.
